Deep learning is one of the most effective ways to put AI into practice, and it is widely used in research areas such as cosmology, physics, computer vision, fusion energy and healthcare. Because deep learning algorithms depend on massive, diverse sample datasets, data loading speed is a critical factor that limits model training efficiency.

Expensive AI accelerators such as GPUs need well-designed system architectures and tuned training parameters to deliver their full computational power. As model parameters and datasets grow exponentially, demand for computing power rises rapidly, which in turn places higher demands on underlying I/O devices such as SSDs.

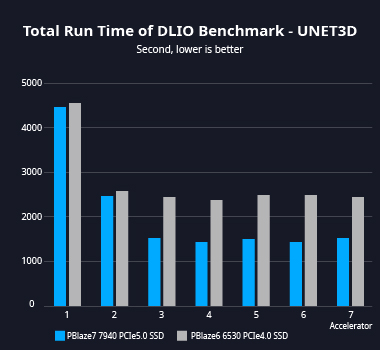

In a Unet3D model training test run with DLIO Benchmark, a deep learning I/O performance testing tool, a single PBlaze7 7940 PCIe 5.0 SSD saved nearly 1,000 seconds over 5 epochs when loading 3 TB of training data.

Compared with a PCIe 4.0 SSD, the PBlaze7 7940 reduced total elapsed time in this test by 42.6%, so training tasks complete sooner.

Because typical AI model training tasks run for dozens or even hundreds of epochs, these savings accumulate, freeing time for additional training tasks and improving the return on the investment in compute.
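The arithmetic behind these figures can be sketched as follows. The sketch takes the reported values (about 1,000 seconds saved over 5 epochs, a 42.6% reduction) as inputs, back-calculates the approximate elapsed times they imply, and extrapolates the per-epoch saving to a longer run. The function names and the assumption that savings scale linearly with epoch count are hypothetical, and because "nearly 1,000 seconds" is a rounded figure, the derived times are approximate.

```python
def implied_elapsed_times(seconds_saved: float, fraction_saved: float) -> tuple[float, float]:
    """Back-calculate (baseline, improved) elapsed times from an absolute
    saving and the fraction of the baseline time it represents."""
    if not 0 < fraction_saved < 1:
        raise ValueError("fraction_saved must be between 0 and 1")
    baseline = seconds_saved / fraction_saved  # slower (PCIe 4.0) run
    return baseline, baseline - seconds_saved  # faster (PCIe 5.0) run


def extrapolate_savings(seconds_saved: float, measured_epochs: int, target_epochs: int) -> float:
    """Hypothetical linear model: the saving per epoch stays constant."""
    return seconds_saved / measured_epochs * target_epochs


if __name__ == "__main__":
    baseline, improved = implied_elapsed_times(1000, 0.426)
    print(f"implied PCIe 4.0 run: ~{baseline:.0f} s, PCIe 5.0 run: ~{improved:.0f} s")
    saved = extrapolate_savings(1000, measured_epochs=5, target_epochs=100)
    print(f"projected saving over 100 epochs: ~{saved / 3600:.1f} h")
```

Under these assumptions the implied runs are roughly 2,347 s and 1,347 s, and a 100-epoch job would save on the order of 5.6 hours; real pipelines with caching or prefetching may not scale this cleanly.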

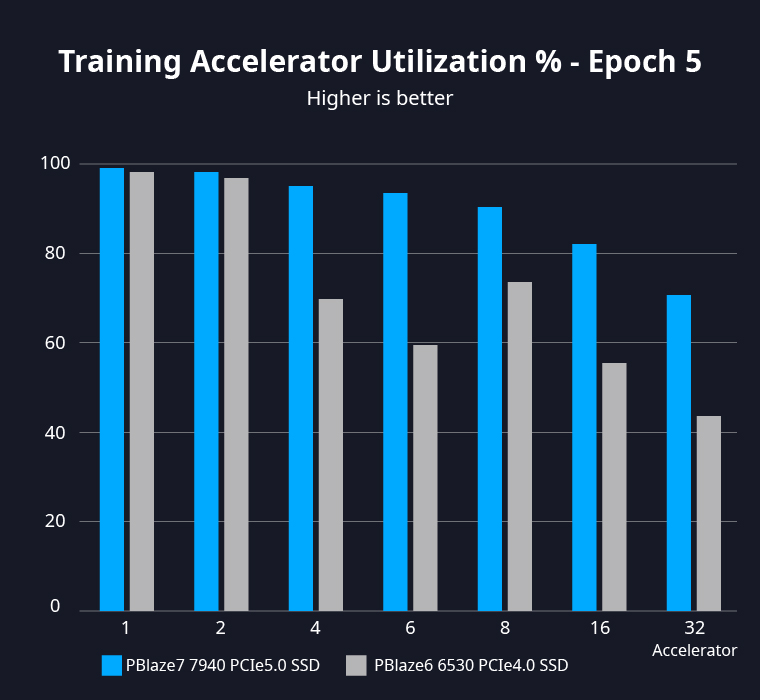

In a test environment configured with 8 GPUs, a single PBlaze7 7940 SSD still kept GPU utilization above 90%.
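GPU (accelerator) utilization in DLIO-style benchmarks is commonly described as the share of time the accelerators spend computing rather than waiting for the next batch of data. The minimal sketch below computes that ratio from per-step timings and checks it against a 90% threshold like the one above. The `StepTiming` record and the example timings are hypothetical, and DLIO's own reporting may differ in detail (for example, in how it treats the first step or checkpointing).

```python
from dataclasses import dataclass


@dataclass
class StepTiming:
    compute_s: float  # time the accelerator spent computing this step
    io_wait_s: float  # time the accelerator sat idle waiting for data


def accelerator_utilization(steps: list[StepTiming]) -> float:
    """Fraction of total step time spent computing (0.0 to 1.0)."""
    compute = sum(s.compute_s for s in steps)
    total = sum(s.compute_s + s.io_wait_s for s in steps)
    if total == 0:
        raise ValueError("no step time recorded")
    return compute / total


def meets_target(steps: list[StepTiming], target: float = 0.90) -> bool:
    """True if utilization reaches the given threshold."""
    return accelerator_utilization(steps) >= target


if __name__ == "__main__":
    # Hypothetical timings: 0.95 s of compute and 0.05 s of I/O wait per step.
    steps = [StepTiming(compute_s=0.95, io_wait_s=0.05) for _ in range(100)]
    print(f"utilization: {accelerator_utilization(steps):.1%}")
    print("meets 90% target:", meets_target(steps))
```

Any time the storage layer cannot deliver the next batch before compute finishes shows up as `io_wait_s`, which is why faster reads translate directly into higher utilization.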

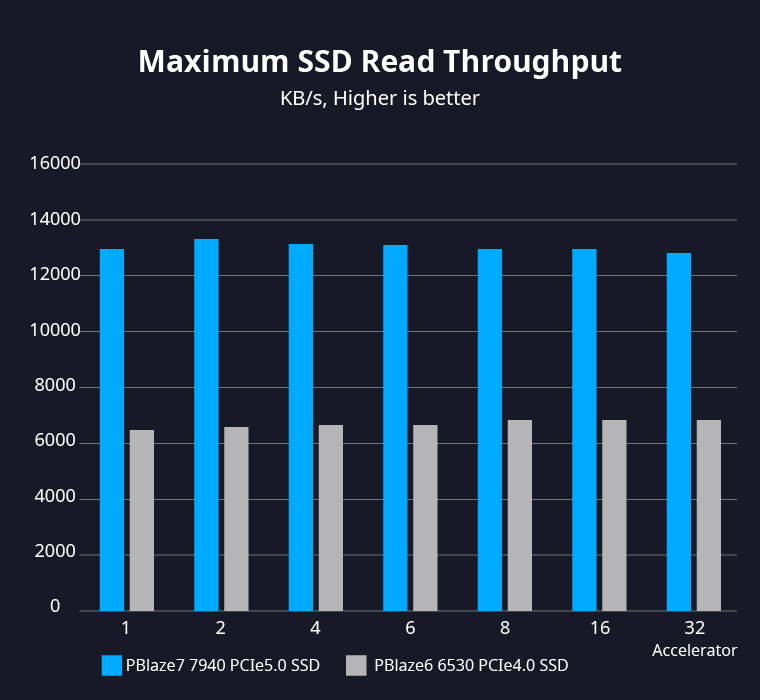

In the Unet3D training task, a single PBlaze7 7940 SSD delivers more than 10 GB/s of I/O throughput to the GPUs.
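Whether a given read throughput is sufficient depends on how quickly the GPUs consume samples. A back-of-the-envelope estimate multiplies the number of GPUs, each GPU's sample rate and the average sample size. The sketch below encodes that estimate plus a simple utilization bound; the per-GPU sample rate and sample size in the example are hypothetical placeholders, not figures from this test, and the bound assumes data loading overlaps fully with compute.

```python
def required_read_bandwidth_gbps(num_gpus: int, samples_per_s_per_gpu: float,
                                 sample_bytes: float) -> float:
    """Aggregate read bandwidth (decimal GB/s) needed to keep every GPU fed."""
    return num_gpus * samples_per_s_per_gpu * sample_bytes / 1e9


def max_utilization(supplied_gbps: float, required_gbps: float) -> float:
    """Upper bound on GPU utilization when storage bandwidth is the only
    bottleneck (simplifying assumption: loading overlaps with compute)."""
    return min(1.0, supplied_gbps / required_gbps)


if __name__ == "__main__":
    # Hypothetical workload: 8 GPUs, 8 samples/s each, 140 MB per sample.
    need = required_read_bandwidth_gbps(8, 8, 140e6)
    print(f"required: {need:.2f} GB/s")
    print(f"utilization bound at 10 GB/s: {max_utilization(10, need):.0%}")
```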

During the data loading phase of training, the PBlaze7 7940 SSD reads at close to its full rated speed, greatly reducing the impact of storage reads on training performance.

For training tasks whose datasets are too large to fit entirely in memory, a faster NVMe SSD directly reduces the time spent waiting for data to be loaded into memory.
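A simple lower bound makes this concrete: when the dataset cannot be cached, every epoch must read it in full from storage, so per-epoch read time can be no shorter than dataset size divided by sustained read bandwidth. The sketch below applies that bound to a 3 TB dataset (assuming decimal units) using 14 GB/s, the sequential read rating given for the PBlaze7 7940, and a hypothetical 7 GB/s drive for comparison. Real runs rarely sustain peak sequential speed, so actual times are longer.

```python
def min_epoch_read_seconds(dataset_bytes: float, read_bytes_per_s: float) -> float:
    """Lower bound on the time to read the full dataset once from storage,
    assuming nothing is cached in memory and bandwidth is sustained."""
    return dataset_bytes / read_bytes_per_s


if __name__ == "__main__":
    dataset = 3e12  # 3 TB, assuming decimal units
    drives = [("14 GB/s drive", 14e9), ("7 GB/s drive (hypothetical)", 7e9)]
    for label, bw in drives:
        t = min_epoch_read_seconds(dataset, bw)
        print(f"{label}: >= {t:.0f} s per epoch, >= {5 * t:.0f} s over 5 epochs")
```

Halving read bandwidth doubles this floor, which is why storage speed dominates epoch time once the dataset no longer fits in memory.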

The PBlaze7 7940 delivers 14 GB/s of sequential read throughput and 2,800K IOPS of 4K random read performance, both twice those of PCIe 4.0 SSDs.
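Random-read IOPS and throughput are related through the transfer size: throughput = IOPS × block size. The sketch below applies this to the 2,800K IOPS figure, assuming "4K" means 4,096-byte reads; the function name is illustrative only.

```python
def iops_to_bytes_per_s(iops: float, block_bytes: int) -> float:
    """Throughput implied by an IOPS figure at a fixed transfer size."""
    return iops * block_bytes


if __name__ == "__main__":
    bps = iops_to_bytes_per_s(2_800_000, 4096)  # 2,800K IOPS at 4 KiB per read
    print(f"{bps / 1e9:.2f} GB/s ({bps / 2**30:.2f} GiB/s)")
```

This works out to about 11.47 GB/s (10.68 GiB/s), so even small random reads can move data at a rate close to the sequential figure.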

Capacities reach up to 30.72 TB in the 2.5-inch form factor and 15.36 TB in the E1.S form factor, doubling capacity while also delivering twice the performance.

Higher per-drive capacity significantly reduces the number of servers and switches needed for the same amount of storage, so more accelerators can be deployed, laying the groundwork for sustained growth in computing power.
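The capacity argument can be sketched as a simple sizing calculation: the number of drives is the target capacity divided by per-drive capacity, rounded up, and the number of storage servers follows from the drive bays each server provides. The 1 PB target and 24 bays per server below are hypothetical, and real designs must also account for redundancy, formatting overhead and performance headroom.

```python
import math


def drives_needed(target_tb: float, drive_tb: float) -> int:
    """Minimum number of drives to reach a raw capacity target (decimal TB)."""
    return math.ceil(target_tb / drive_tb)


def servers_needed(drives: int, bays_per_server: int) -> int:
    """Minimum number of servers needed to host the drives."""
    return math.ceil(drives / bays_per_server)


if __name__ == "__main__":
    target_tb = 1000  # hypothetical 1 PB of raw capacity
    bays = 24  # hypothetical drive bays per server
    for drive_tb in (30.72, 15.36):
        d = drives_needed(target_tb, drive_tb)
        print(f"{drive_tb} TB drives: {d} drives, {servers_needed(d, bays)} servers")
```

In this hypothetical configuration, 30.72 TB drives need 33 drives in 2 servers, while drives of half that capacity need 66 drives in 3 servers.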